Abstract

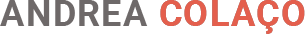

In this project, we construct a virtual desktop centered on the smartphone display, opportunistically using the surface around the phone for input. We capture hand motion with Mime, a 3-pixel optical time-of-flight sensor. Mounted on the phone, the sensor lets us map the table surface next to the phone onto a conventional windowed desktop, with the phone's display acting as a small viewport onto that desktop. Moving the hand is like moving a mouse: as the user's hand shifts into another part of the desktop, the phone's viewport moves with it. We demonstrate that existing applications can be readily controlled with the hands, rather than requiring new applications written specifically for smart surfaces.
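The hand-to-desktop mapping described above can be illustrated with a minimal sketch. It is not the project's implementation: the linear mapping, the `Rect` type, the function names `hand_to_cursor` and `pan_viewport`, and all dimensions are assumptions chosen for illustration. It assumes the sensor already reports a 2D hand position on the table.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """Axis-aligned rectangle: top-left corner plus width and height."""
    x: float
    y: float
    w: float
    h: float


def hand_to_cursor(hand_xy, sense_area, desktop_size):
    """Linearly map a hand position on the table to a desktop pixel.

    hand_xy and sense_area share one unit (assumed: centimeters).
    Positions outside the sensed region are clamped to its edges.
    """
    hx, hy = hand_xy
    dw, dh = desktop_size
    u = min(max((hx - sense_area.x) / sense_area.w, 0.0), 1.0)
    v = min(max((hy - sense_area.y) / sense_area.h, 0.0), 1.0)
    return u * (dw - 1), v * (dh - 1)


def pan_viewport(viewport, cursor, desktop_size):
    """Shift the viewport by the minimum needed to contain the cursor,
    keeping the viewport inside the desktop bounds."""
    cx, cy = cursor
    dw, dh = desktop_size
    x, y = viewport.x, viewport.y
    if cx < x:
        x = cx
    elif cx > x + viewport.w - 1:
        x = cx - (viewport.w - 1)
    if cy < y:
        y = cy
    elif cy > y + viewport.h - 1:
        y = cy - (viewport.h - 1)
    x = min(max(x, 0), dw - viewport.w)
    y = min(max(y, 0), dh - viewport.h)
    return Rect(x, y, viewport.w, viewport.h)


# Hypothetical dimensions: a 40 cm x 30 cm sensed area, a 1920x1080
# virtual desktop, and a 480x320 phone viewport.
SENSE_AREA = Rect(0, 0, 40, 30)
DESKTOP = (1920, 1080)

if __name__ == "__main__":
    view = Rect(0, 0, 480, 320)
    cursor = hand_to_cursor((20, 15), SENSE_AREA, DESKTOP)
    view = pan_viewport(view, cursor, DESKTOP)
    print(cursor, view)
```

Panning only when the cursor crosses the viewport edge keeps the display still during small hand movements. A real system might instead keep the cursor centered or smooth the motion.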

Videos

Scrolling:

Annotation:

Map Navigation:

Collaborator

Hye Soo Yang